Apple Faces Major Lawsuit Over Dropped CSAM Detection Feature

Apple is under fire for its 2022 decision to abandon a planned child sexual abuse material (CSAM) detection system. A lawsuit, spearheaded by a survivor of childhood sexual abuse, accuses Apple of failing to protect victims by canceling the feature. This legal battle highlights the tension between privacy, corporate responsibility, and the fight against child exploitation.

The Abandoned Plan

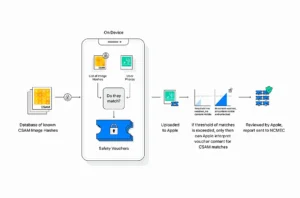

In 2021, Apple announced plans to roll out a CSAM detection feature that would check photos uploaded to iCloud for known abusive content. The system was designed to run on-device, comparing photo hashes against a database of known CSAM images supplied by child-safety organizations, rather than having Apple inspect the photos themselves. Apple presented the design as a way to protect vulnerable individuals while still safeguarding user data.
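To make the hash-matching idea concrete, here is a deliberately simplified sketch. It is not Apple's system: Apple's published design used a perceptual hash (NeuralHash), which tolerates resizing and recompression, together with cryptographic techniques (private set intersection and threshold secret sharing) so that individual matches stayed hidden until an account crossed a threshold, followed by human review. The sketch swaps all of that for a plain SHA-256 lookup, which only matches byte-identical files, and a simple counter. The names `KNOWN_HASHES`, `MATCH_THRESHOLD`, and `should_flag_account`, and the threshold value, are hypothetical.

```python
import hashlib

# Hypothetical stand-in for a database of known-image hashes.
# Apple's design used perceptual NeuralHash values, not SHA-256 digests.
KNOWN_HASHES = {
    hashlib.sha256(b"known-image-A").hexdigest(),
    hashlib.sha256(b"known-image-B").hexdigest(),
}

# Hypothetical threshold: a single match is never reported on its own.
MATCH_THRESHOLD = 2


def image_hash(data: bytes) -> str:
    """Return a SHA-256 hex digest (exact-match only, unlike a perceptual hash)."""
    return hashlib.sha256(data).hexdigest()


def count_matches(photos: list[bytes]) -> int:
    """Count how many photos have a hash present in the known-hash set."""
    return sum(1 for photo in photos if image_hash(photo) in KNOWN_HASHES)


def should_flag_account(photos: list[bytes]) -> bool:
    """Flag for human review only once matches reach the threshold."""
    return count_matches(photos) >= MATCH_THRESHOLD


if __name__ == "__main__":
    library = [b"holiday-photo", b"known-image-A"]
    print(should_flag_account(library))  # False: one match is below the threshold
    library.append(b"known-image-B")
    print(should_flag_account(library))  # True: two matches reach the threshold
```

The threshold is the design choice that matters here: it is meant to keep a stray false positive from triggering a report, which is part of why the real system paired matching with cryptography and manual review.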

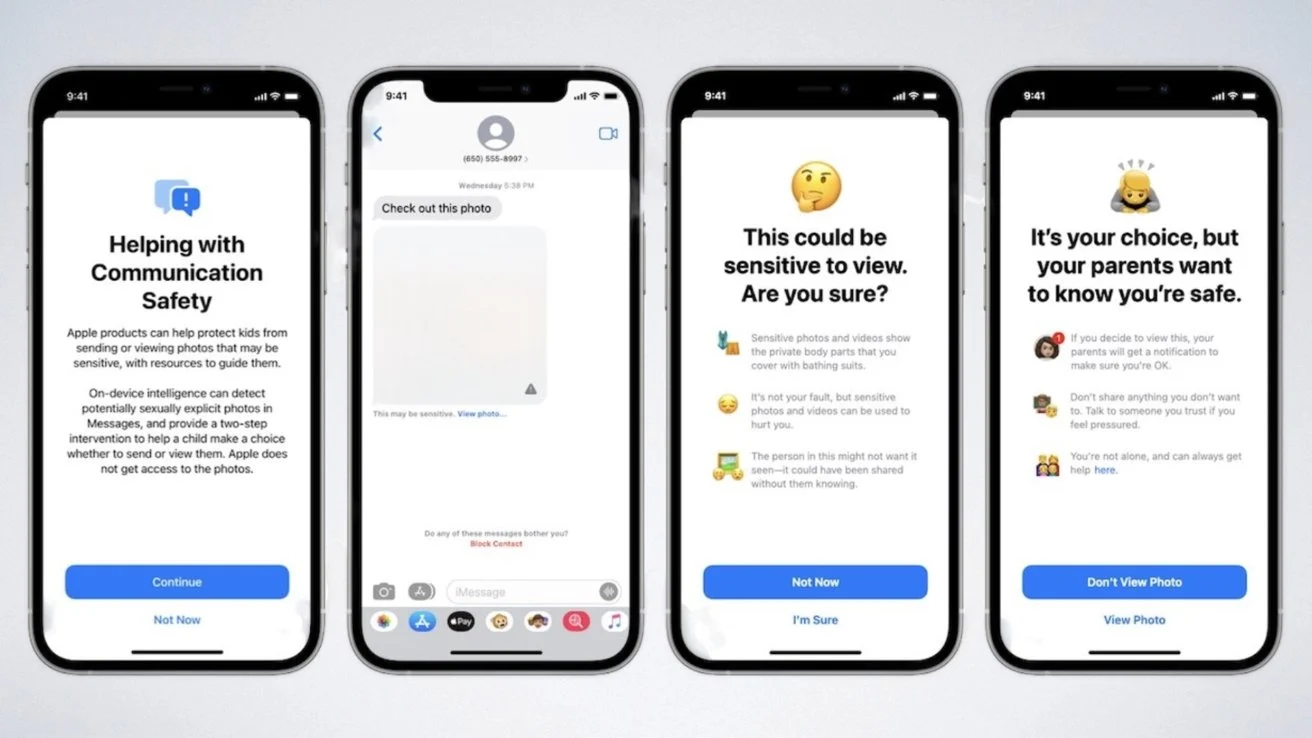

In addition, Apple introduced a “Communication Safety” feature that uses on-device machine learning to detect nudity in photos sent or received through Messages. Designed primarily for children's accounts, it warns users before they view or send explicit content.

While the Communication Safety feature remains active, Apple canceled its CSAM detection plans in 2022 after facing significant backlash. Privacy advocates argued that the system could be exploited by governments for surveillance, while child safety groups criticized the cancellation as a step back in combating online exploitation.

The Lawsuit

A 27-year-old woman, identified under a pseudonym, is suing Apple for abandoning the CSAM detection system. As a survivor of sexual abuse, she claims the feature’s removal has allowed the continued circulation of images related to her case through iCloud. Her lawyers argue that Apple’s decision constitutes a failure to fulfill its commitment to protecting victims of exploitation.

The lawsuit seeks to represent up to 2,680 potential victims, each entitled to a minimum of $150,000 in damages under federal law. Because the suit seeks to have damages tripled, Apple could face a total award exceeding $1.2 billion if the case succeeds.
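The headline figure can be checked with simple arithmetic. The class size and the $150,000 statutory minimum come from the text; the threefold multiplier is an assumption here, based on reporting that the suit seeks tripled damages. Without it, the minimum comes to roughly $402 million rather than $1.2 billion.

```python
# Figures from the lawsuit as described: maximum class size and statutory minimum.
POTENTIAL_VICTIMS = 2_680
MINIMUM_AWARD_USD = 150_000

# Assumption: damages are tripled, as the suit reportedly seeks.
TREBLE_MULTIPLIER = 3

base_damages = POTENTIAL_VICTIMS * MINIMUM_AWARD_USD
trebled_damages = base_damages * TREBLE_MULTIPLIER

if __name__ == "__main__":
    print(f"Base minimum: ${base_damages:,}")     # Base minimum: $402,000,000
    print(f"Tripled:      ${trebled_damages:,}")  # Tripled:      $1,206,000,000
```

These are floor values: any claimant awarded more than the statutory minimum would push the total higher.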

The Allegations

The plaintiff alleges that by discontinuing the CSAM detection feature, Apple has enabled the spread of abusive material, effectively selling “defective products” that fail to protect users. Her legal team uncovered over 80 instances of images from her case being shared via Apple’s services.

One example cited in the lawsuit involves a Bay Area man found in possession of over 2,000 CSAM images and videos stored on iCloud. Critics argue that such incidents could have been mitigated if Apple had implemented the detection system.

Industry Comparison

The lawsuit also points to competitors such as Google and Meta, which have deployed CSAM detection systems that use automated tools to identify, remove, and report abusive content on their platforms.

By contrast, Apple’s focus on user privacy has limited its ability to detect and address CSAM. While the Communication Safety feature is a positive step, experts argue it is insufficient to combat the scale of the problem.

Legal Challenges

Apple is facing additional legal scrutiny in a separate case in North Carolina. A nine-year-old victim alleges that iCloud was used to distribute CSAM, including material sent to her by strangers who encouraged her to produce and upload similar videos.

In response, Apple has invoked Section 230 of the Communications Decency Act, which shields online platforms from liability for content posted by their users. Apple also argues that iCloud is a service rather than a product and is therefore not subject to product liability law.

However, a recent ruling by the US Court of Appeals for the Ninth Circuit has narrowed this defense. The court determined that Section 230 protections may apply only to content moderation and do not provide blanket immunity from liability, potentially leaving Apple exposed.

Apple’s Response

Apple spokesperson Fred Sainz defended the company’s commitment to fighting CSAM, stating, “We believe child sexual abuse material is abhorrent, and we are urgently innovating to combat these crimes without compromising user privacy.”

Apple has expanded its Communication Safety feature to more services, allowing users to report harmful material directly to the company. Despite these measures, critics argue that Apple’s efforts fall short compared to its initial promise.

Broader Implications

This case raises critical questions about the responsibilities of tech companies in addressing online exploitation. While privacy advocates applaud Apple’s decision to prioritize user data security, victims’ rights groups argue that this approach leaves vulnerable individuals unprotected.

The lawsuit could have far-reaching consequences for Apple and the tech industry. A ruling against Apple may set a precedent, compelling companies to adopt more aggressive measures against CSAM. On the other hand, a win for Apple could reinforce the importance of privacy in digital spaces, even at the expense of certain safeguards.

Moving Forward

As Apple navigates this legal battle, the company faces mounting pressure to balance user privacy with the need for effective CSAM detection. The outcome of this case will likely influence how tech companies address similar challenges in the future.

The lawsuit also highlights the broader debate over the role of Big Tech in combating online exploitation. With billions of users worldwide, companies like Apple, Google, and Meta wield immense power, and bear matching responsibility, in shaping the digital landscape.

For Apple, the stakes are high. How the company responds to these allegations will not only impact its reputation but also set a precedent for how tech giants address the delicate balance between privacy and safety in the digital age.